
Launch a GPU-backed Google Compute Engine instance and set up Tensorflow, Keras and Jupyter

Introduction

Back in June I became a student of the Udacity Self-Driving Car Nanodegree program. It’s a nine-month-long curriculum that teaches you everything you need to know to work on self-driving cars. Topics include:

- Deep Learning

- Computer Vision

- Sensor Fusion

- Controllers

- Vehicle Kinematics

- Automotive hardware

Our first project was to detect lanes in a video feed, and most of the students from my batch are now very deep into the deep learning classes.

These classes rely on Jupyter notebooks running Tensorflow programs, and I learned the hard way that the GeForce GT 750M in my MacBook Pro and its 384 CUDA cores were not going to cut it.

Time to move on to something beefier.

AWS vs GCP

All students get AWS credits as part of the Udacity program. It’s the de facto platform for the course and all our guides and documentation are based on it.

However, I’m a longtime fan of GCP. I’m already using it for some of my personal projects and I’m constantly looking for new excuses to use it.

This time I wanted to take advantage of these nifty features of the platform:

- Pre-emptible VMs with fixed price.

- Sustained-use discount.

- Sub-hour billing.

I thought I might end up with a pretty cheap deep learning box and also (mostly?) it seemed like I would be in for a good learning experience and some fun.

After some research I quickly discovered that Pre-emptible VMs can’t have GPUs attached and that my predicted monthly usage was definitely not going to trigger sustained-use discount.

Oh well. Looks like it’s only going to be for the learn & fun then. 😅

Pricing

Before we dive in, a quick note about pricing. I settled on the setup below for the needs of this course:

- n1-standard-8 (8 vCPU, 30GB RAM): $0.49/hour

- Tesla K80: $0.70/hour

- 50GB Persistent SSD: ~$0.012/hour

- Zone: europe-west1-b

Total per hour: $1.20/hour

Total for average CarND student usage (15 hours/week for a month): $77

Total for a full month (sustained use discount applied): $784.40
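If you want to play with these numbers, here is a small sketch of the arithmetic behind the hourly and part-time figures. The prices are the ones quoted above; the weeks-per-month value is my own assumption, so the result lands within a couple of dollars of the $77 quoted rather than on it exactly. It also ignores the fact that the disk keeps being billed while the instance is stopped.

```python
# Hourly prices quoted in the list above (USD, at the time of writing).
HOURLY_PRICES = {
    "n1-standard-8": 0.49,
    "tesla-k80": 0.70,
    "ssd-50gb": 0.012,
}

# Assumption for this sketch: an average month has 52 / 12 weeks.
WEEKS_PER_MONTH = 52 / 12


def hourly_total(prices=HOURLY_PRICES):
    """Sum the hourly price of every component of the setup."""
    return sum(prices.values())


def monthly_cost(hours_per_week, prices=HOURLY_PRICES):
    """Estimate a month of usage for a given number of hours per week."""
    return hourly_total(prices) * hours_per_week * WEEKS_PER_MONTH


if __name__ == "__main__":
    print("Per hour: ${:.2f}".format(hourly_total()))
    print("15h/week: ${:.2f} per month".format(monthly_cost(15)))
```

Swap in the prices for your own zone and machine type to get a rough idea of your bill before you launch anything.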

I haven’t done any benchmarking nor did I try to optimise this setup in any way. It might be overly powerful for the purpose of this Nanodegree, or on the contrary not powerful enough, so feel free to tweak if you have a better idea of what you are doing. 😉

The guide

Let’s get started.

Your first step will be to launch an instance with GPU on Google Compute Engine. There are two versions of this guide, one for Web UI lovers and another one for people who don’t like to leave their terminal.

In both cases this guide assumes you already have a Google account. If you don’t, please create one here first.

Launching an instance – Web console edition

Creating a project

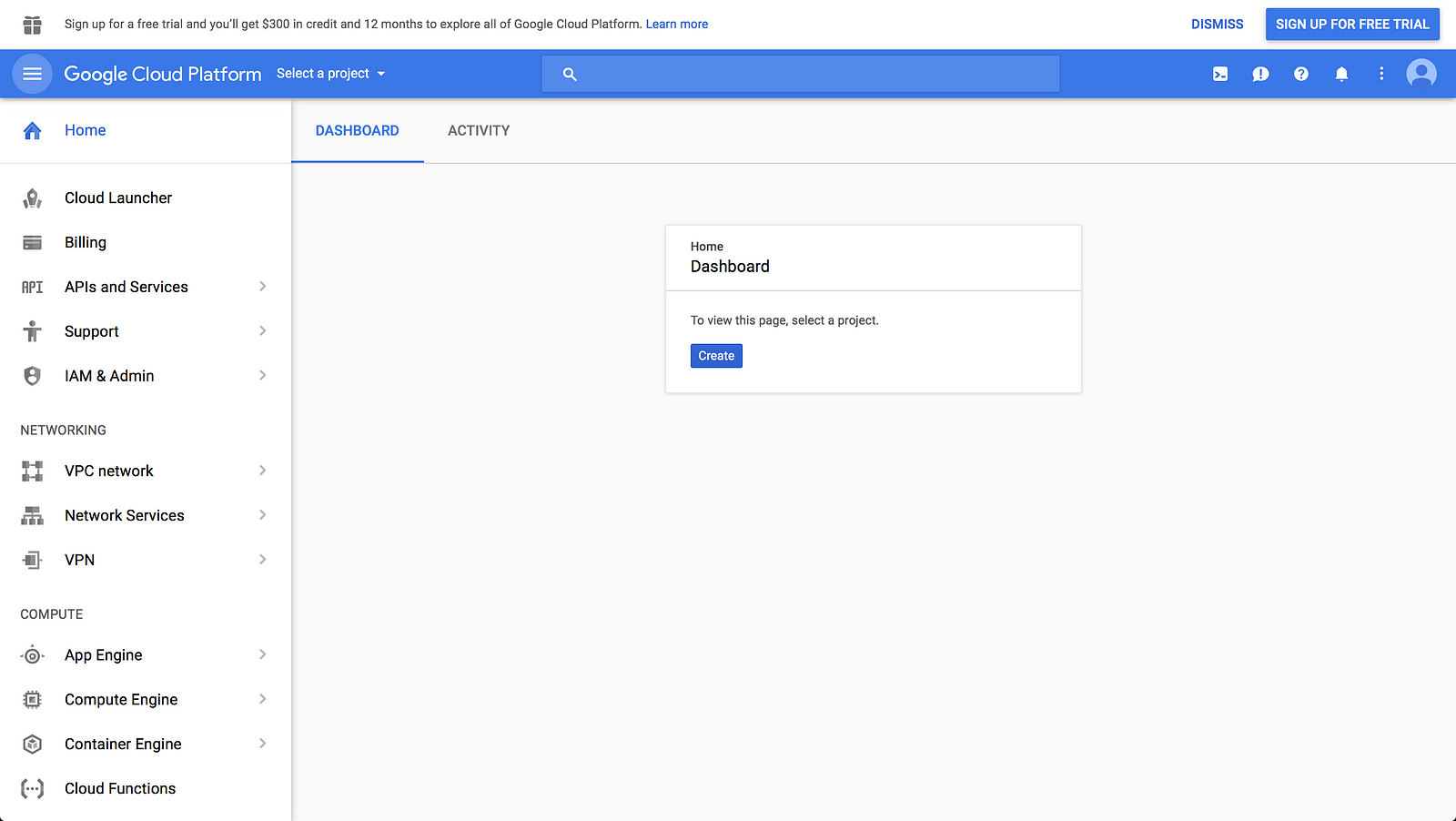

Head to https://console.cloud.google.com.

If it’s your first time using Google Cloud you will be greeted with the following page, that invites you to create your first project:

Good news: if it’s your first time using Google Cloud you are also eligible for $300 in credits! In order to get this credit, click on the big blue button “Sign up for free trial” in the top bar.

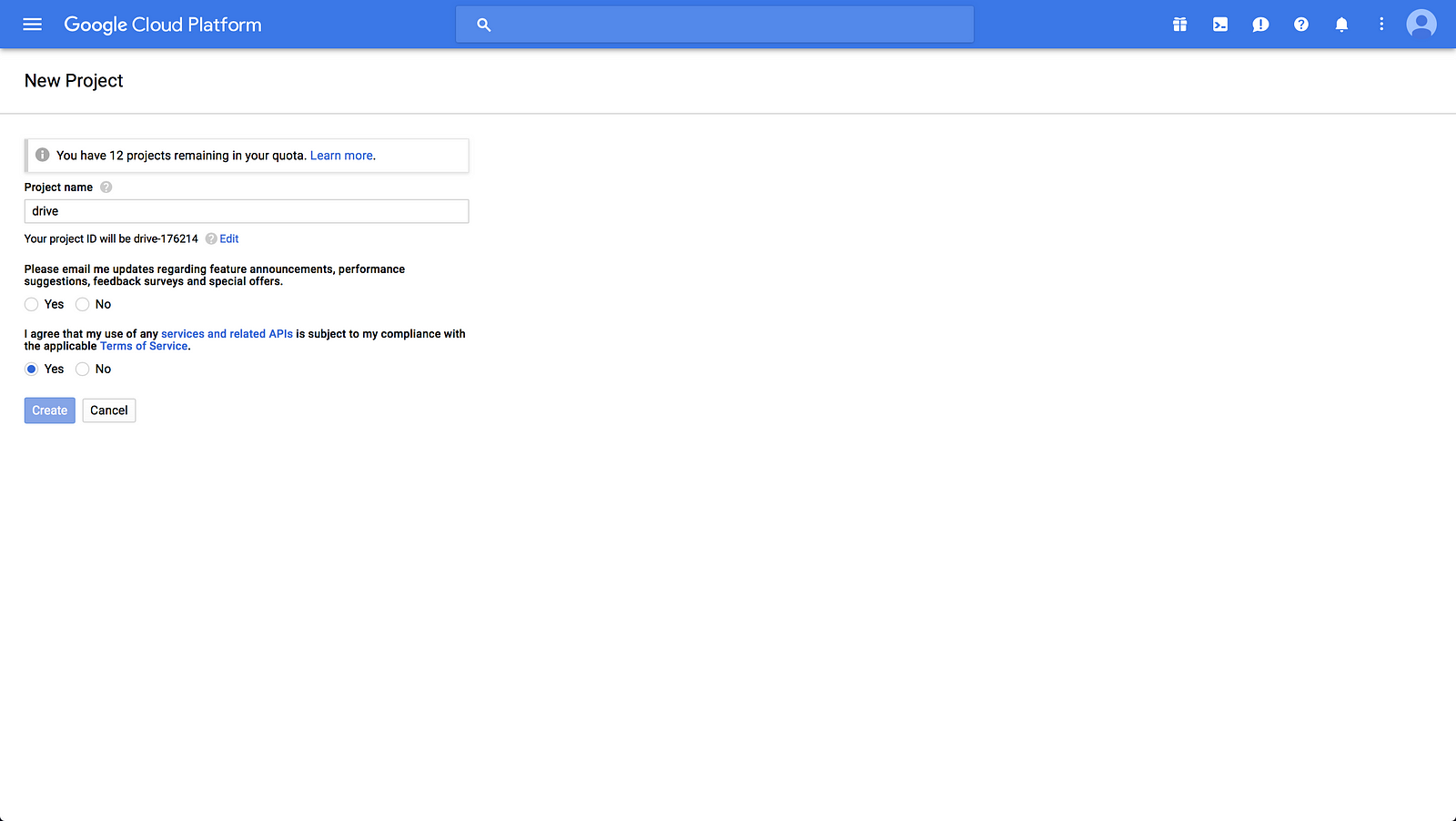

Click on “Create” and you will get to the page shown in the image below.

If you are already a GCP user, click on the project switcher in the top menu bar and click on the “+” button in the top right of the modal that shows up.

Choose a name for your project, agree to the terms of service (if you do!) and click on “Create” again.

If you are already using Google Cloud Platform you most likely already have a billing account and so you can select it on the project creation form.

If you are a new user, you will have to create a billing account once your project is created (this can sometimes take a little while, be patient!). This is as simple as giving your credit card details and address when you are prompted for them.

Use the project switcher in the menu to go to your project’s dashboard.

Requesting a quota increase

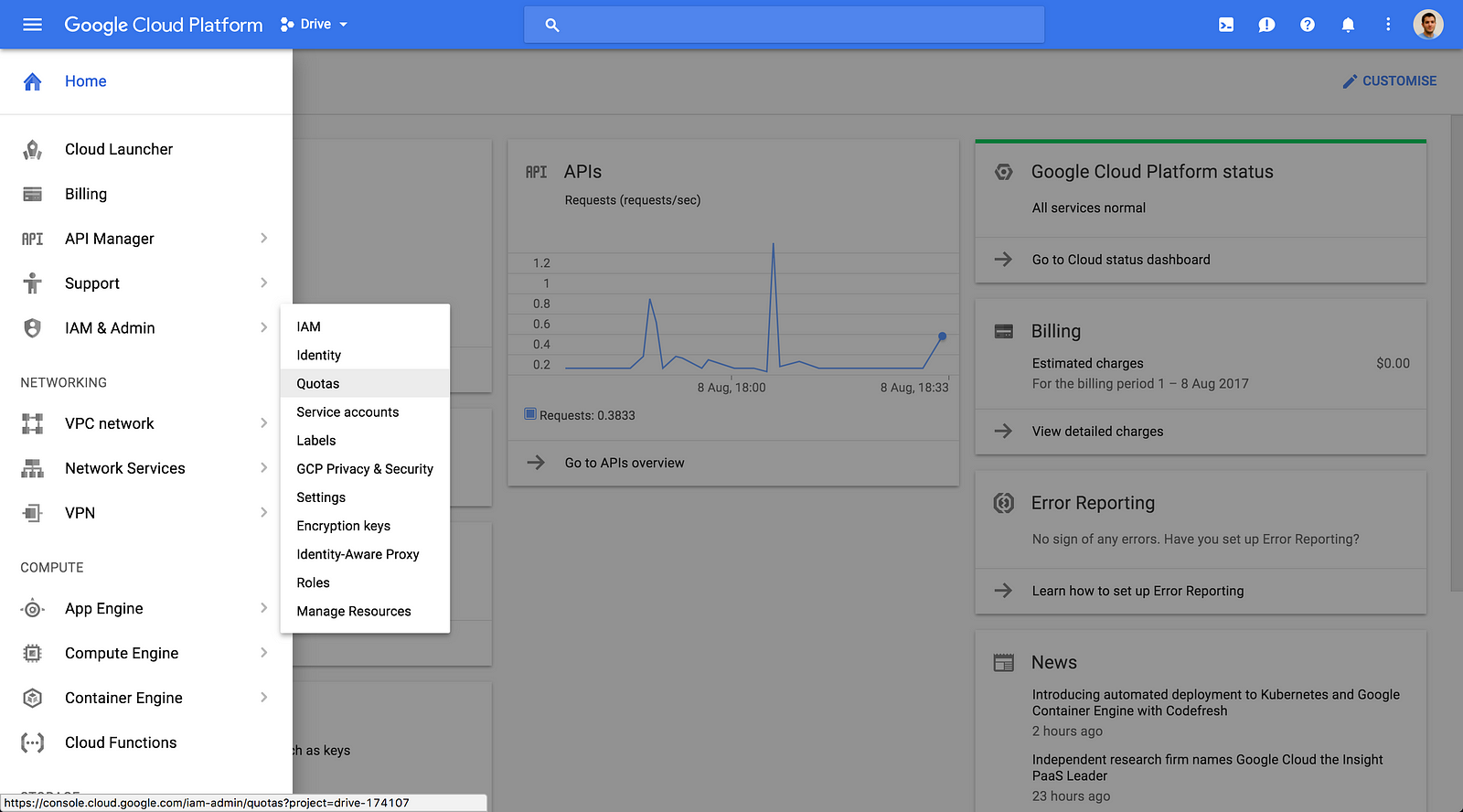

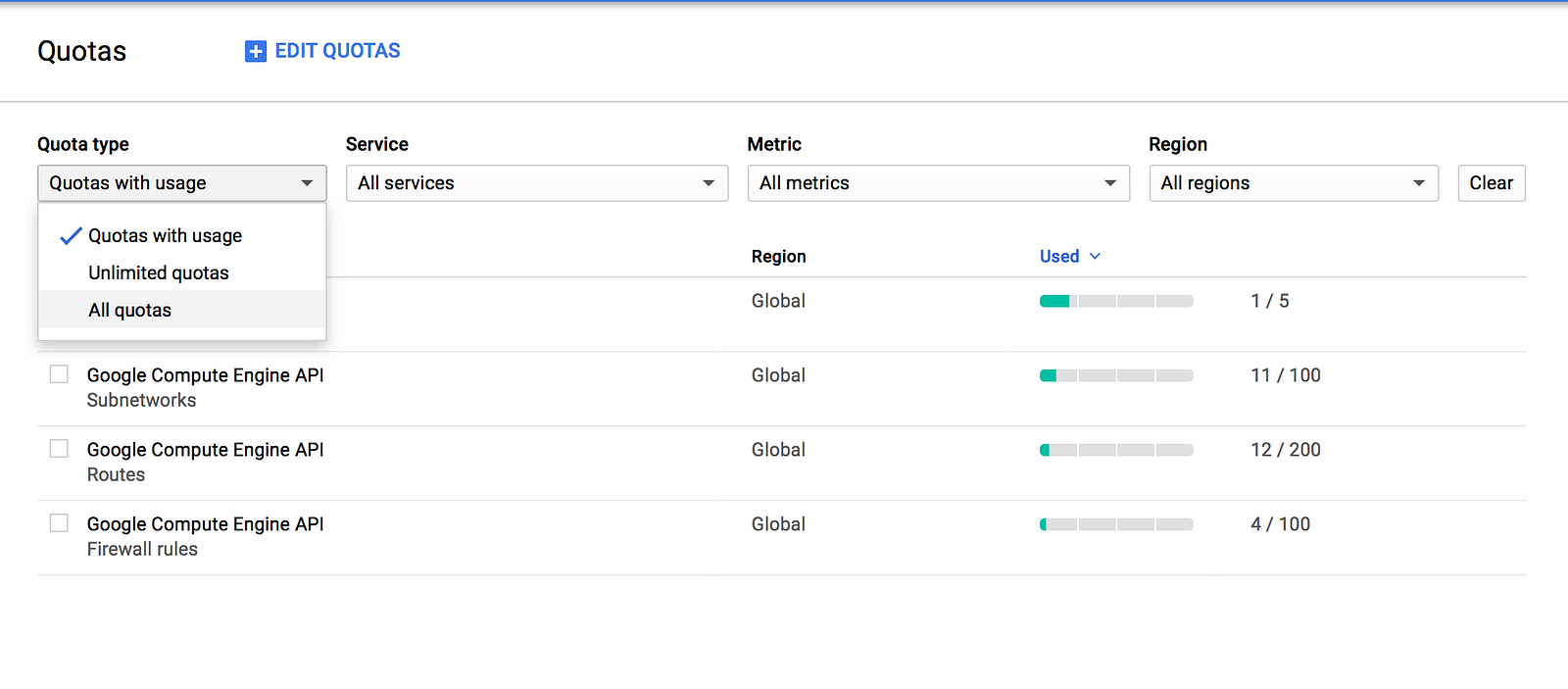

Before you create your first instance you need to request a quota increase in order to attach a GPU to your machine. Click on the side menu (a.k.a the infamous hamburger menu) and head to “IAM & Admin” > “Quotas”.

Display all the quotas using the “Quota type” filter above the table. Select “All quotas”. Then use the “Region” filter to select your region.

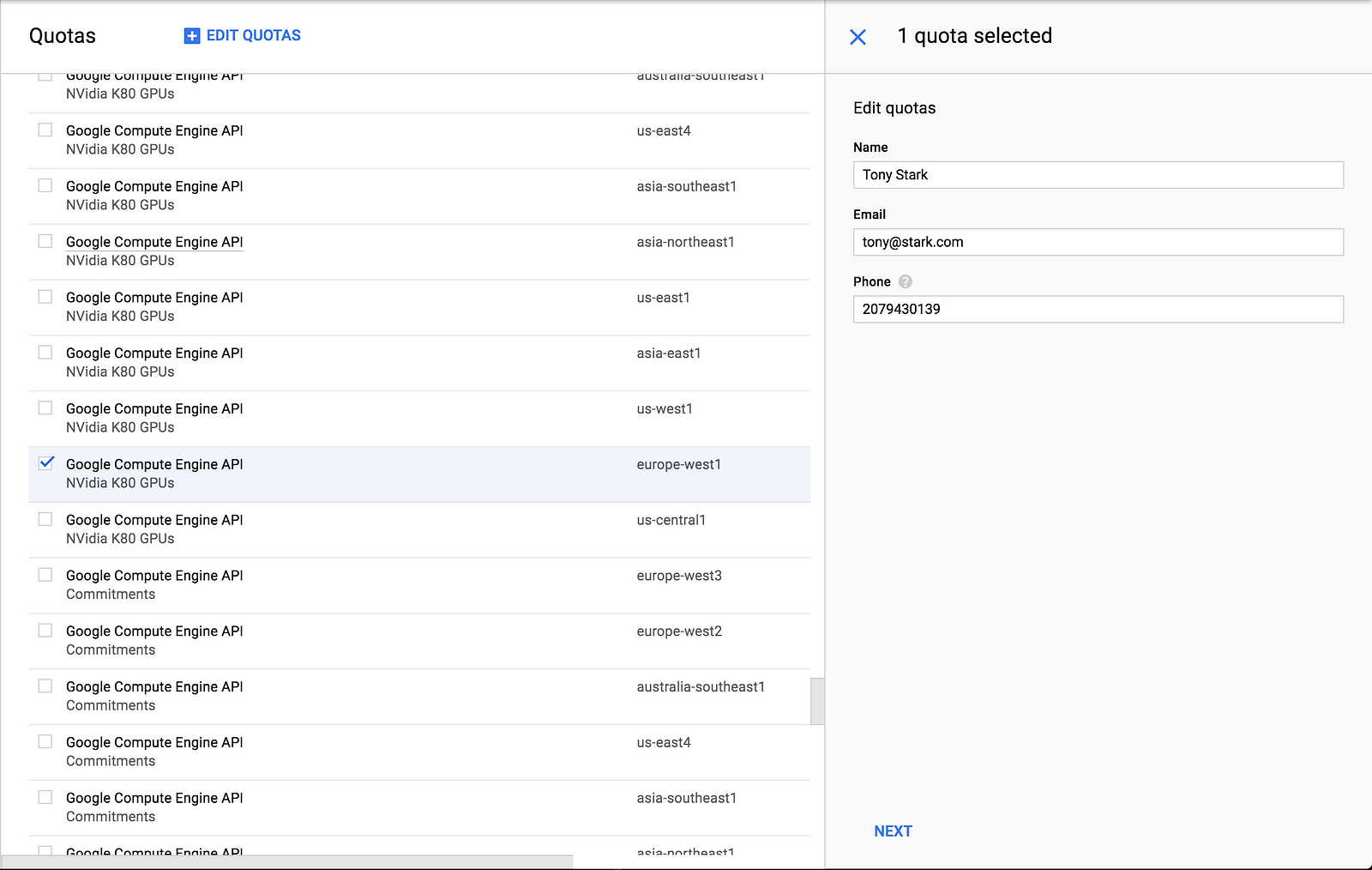

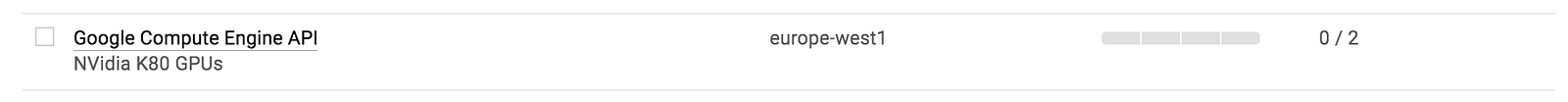

Look for “Google Compute Engine API - NVIDIA Tesla K80 GPUs” in the list, select it and click on “Edit quotas” at the top.

In the menu that opens on the right, enter your personal details if they are not already pre-filled and click on “Next”. Fill the form asking you how many GPUs you want and the reason for requesting a quota increase with these details:

- GPUs requested: 1

- Reason for quota increase: “Training deep neural networks using GPUs”

After a while (should be quite short) Google will approve your request. You are now ready to move to the next step!

Launching a VM instance

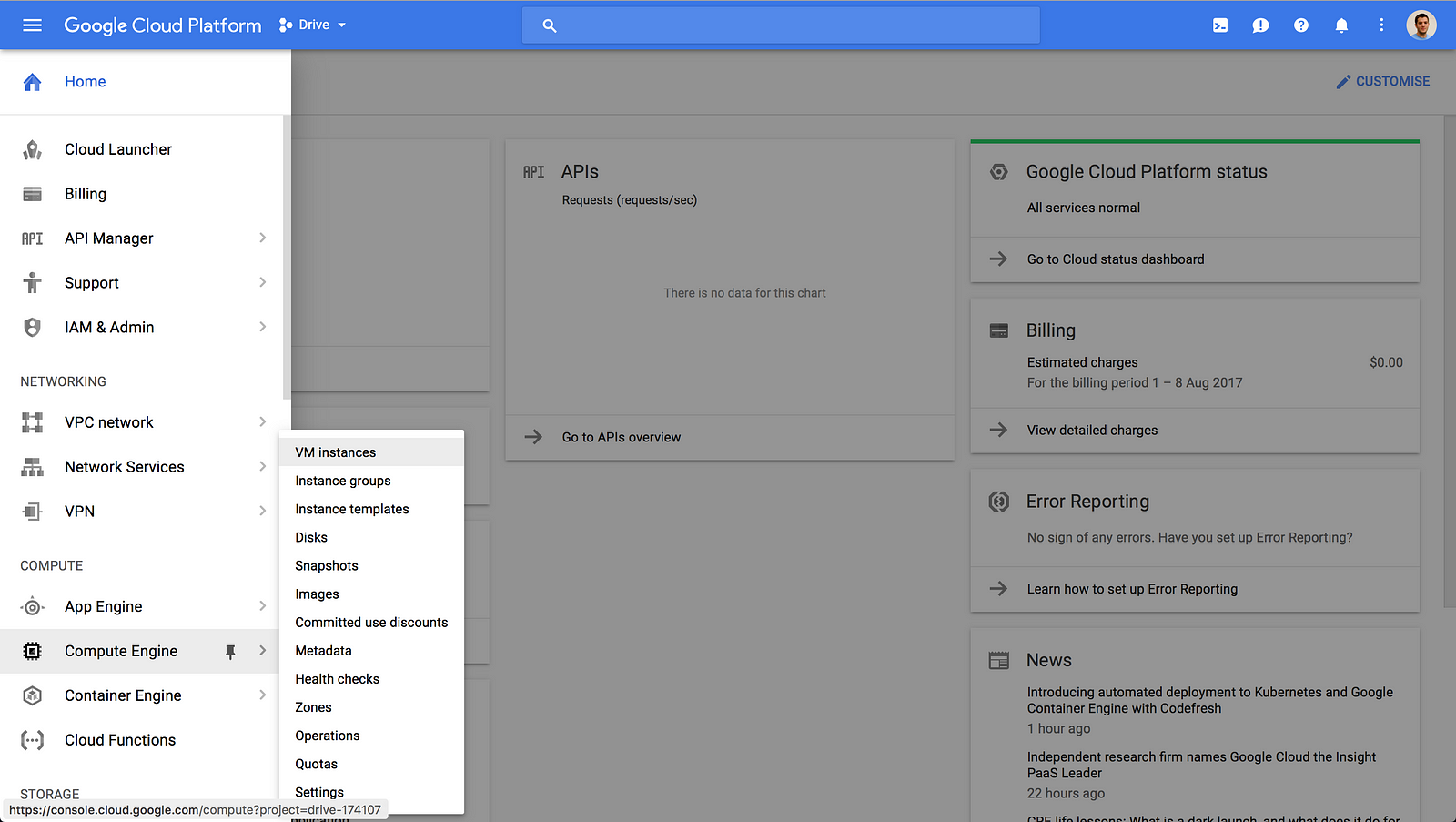

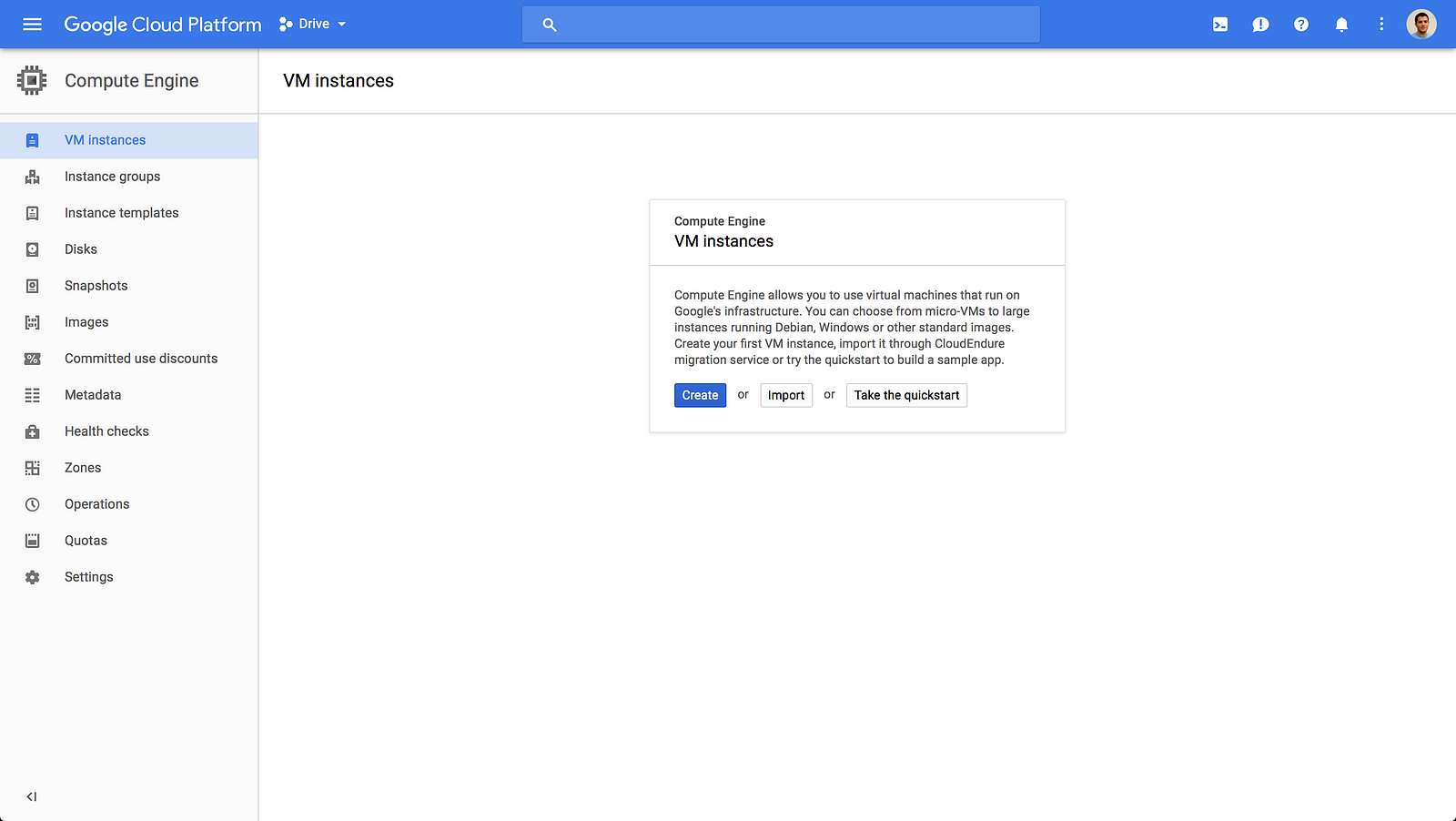

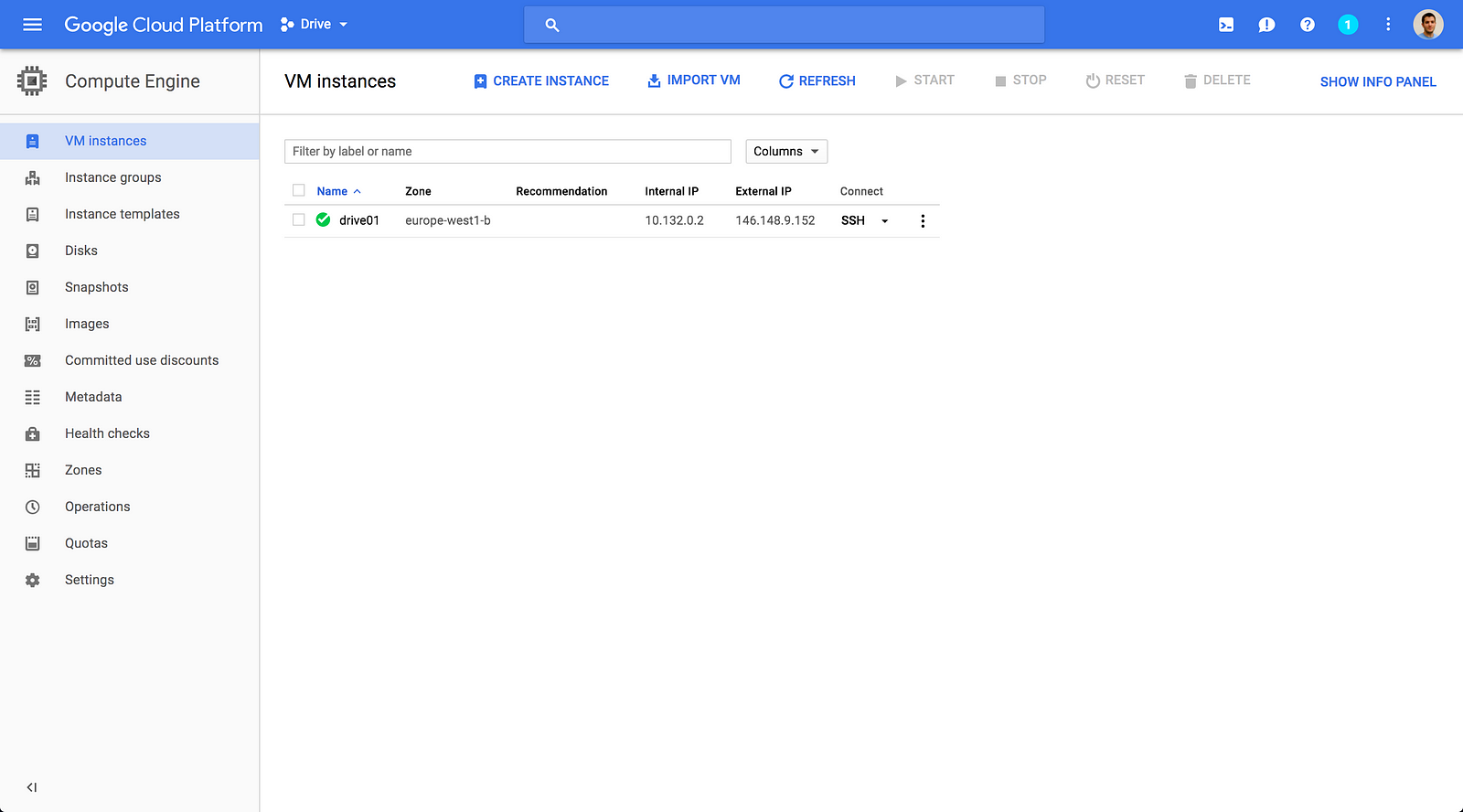

Click on the side menu again and go to “Compute Engine” > “VM Instances”.

You’ll be greeted with a notice inviting you to create your first instance. Click on “Create”.

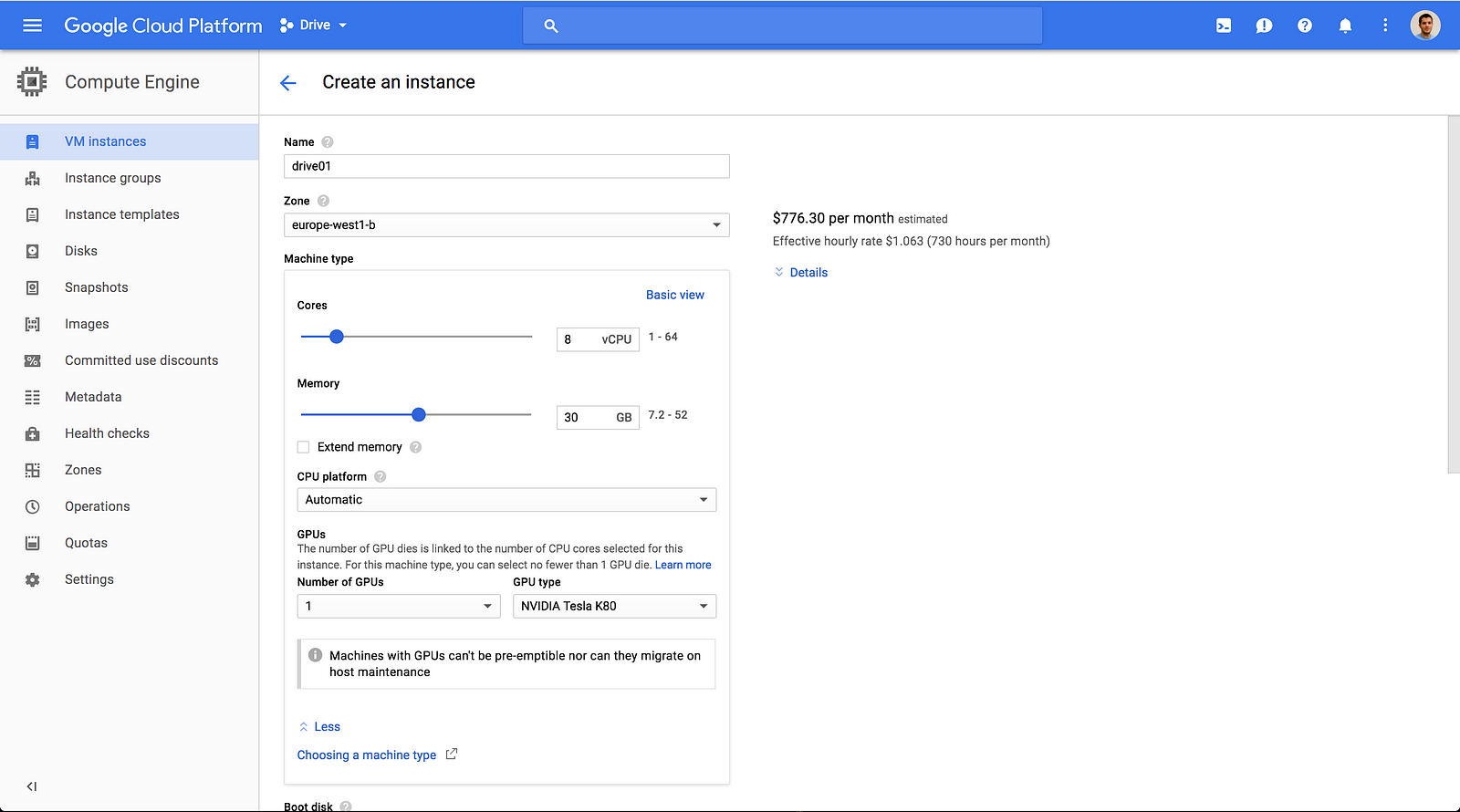

You have already chosen the specs for your server so this form will be pretty straightforward:

- Instance name: I went with drive01 but it’s completely up to you!

- Zone: europe-west1-b. You’re free to choose whichever zone you want but it needs to be a zone with GPU support.

- Instance type: n1-standard-8, 8 vCPUs, 30GB RAM.

- Click on “Customise” next to the instance type selection and click on “GPUS” to show the GPU creation form. Add 1 NVIDIA Tesla K80 using the “Number of GPUs” and “GPU type” dropdowns.

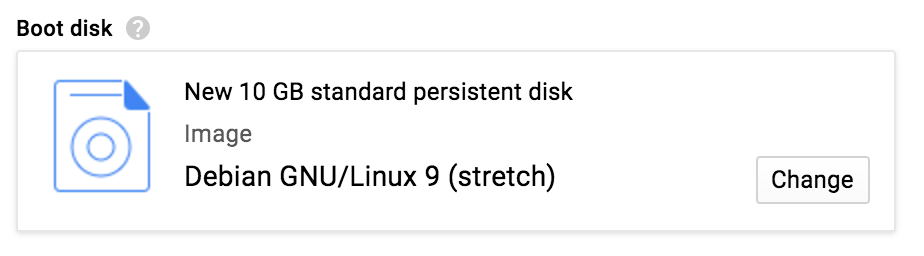

Next you need to select an OS and add a SSD Persistent Disk to your instance. Click on “Change” in the boot disk section.

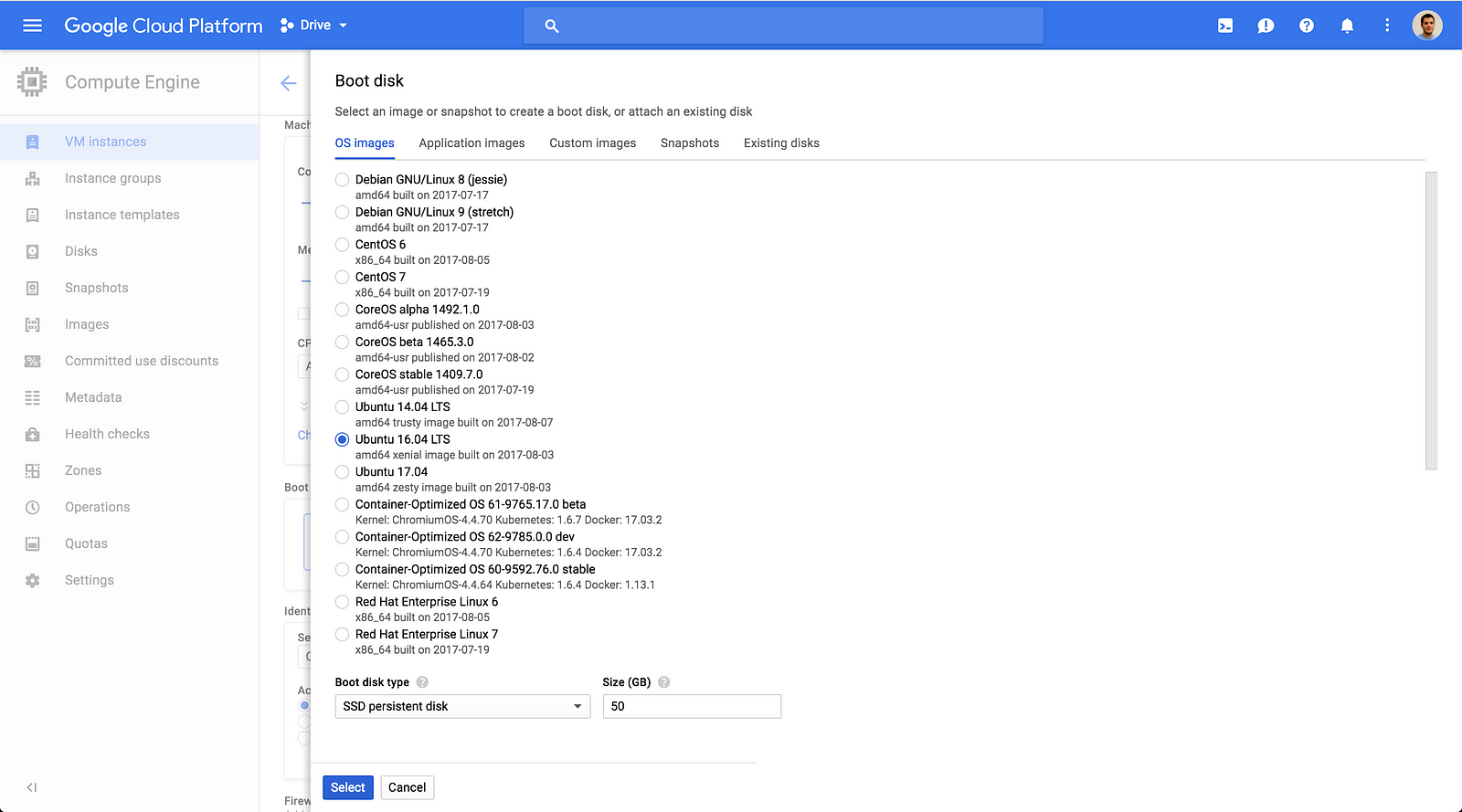

Use these settings:

- OS: Ubuntu 16.04 LTS.

- Boot disk type: SSD Persistent Disk.

- Size: 50GB.

You are almost done.

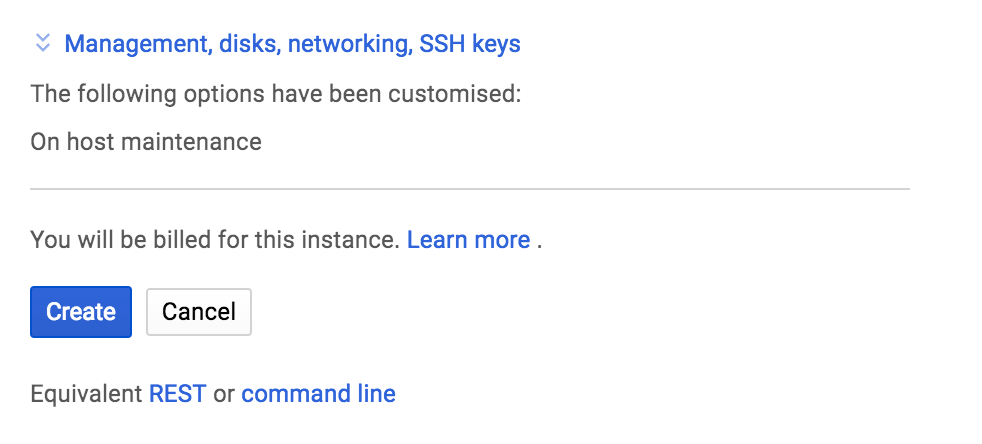

Click on “Management, disks, networking, SSH keys” at the bottom of the form.

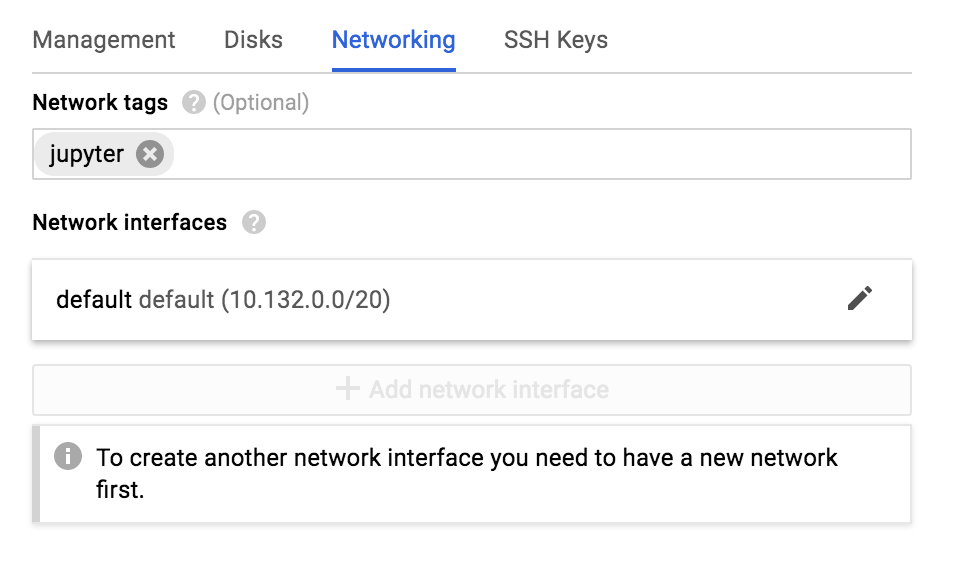

In the “Networking” section add the following network tag: jupyter. This will be useful later on when you configure the firewall.

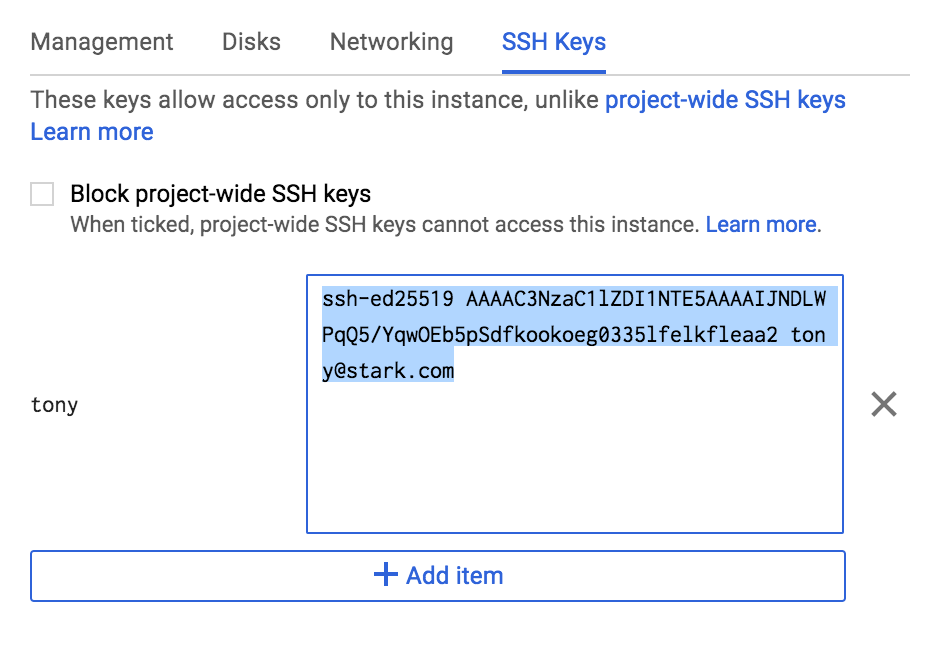

The last step is to attach your SSH key to the instance. Go to the “SSH Keys” section.

Copy and paste your SSH public key. It needs to have this format:

<protocol> <key-blob> <username@example.com>

This is very important as <username> will end up being the username you use to access your server.
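To double-check which username a given key will produce, here is a minimal sketch that splits a public key line into the three parts described above. The function name is made up for this example, and it only looks at the shape of the line; it does not validate the key itself.

```python
def username_from_public_key(line):
    """Return the login name implied by a '<protocol> <key-blob> <comment>' line."""
    parts = line.strip().split()
    if len(parts) != 3:
        raise ValueError("expected '<protocol> <key-blob> <username@host>'")
    protocol, key_blob, comment = parts
    # Everything before the first "@" in the comment is the username.
    username = comment.split("@", 1)[0]
    if not username:
        raise ValueError("the key comment does not start with a username")
    return username


if __name__ == "__main__":
    # Same shortened key as the one used elsewhere in this guide.
    example = "ssh-rsa AAAAB3NzaC1y[...]c2EYSYVVw== steve@drive01"
    print(username_from_public_key(example))
```

If the function raises an error, the key is probably missing its trailing comment and you should add one before pasting it in the form.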

Click on “Create” at the bottom of the page.

Note the instance’s external IP for later.

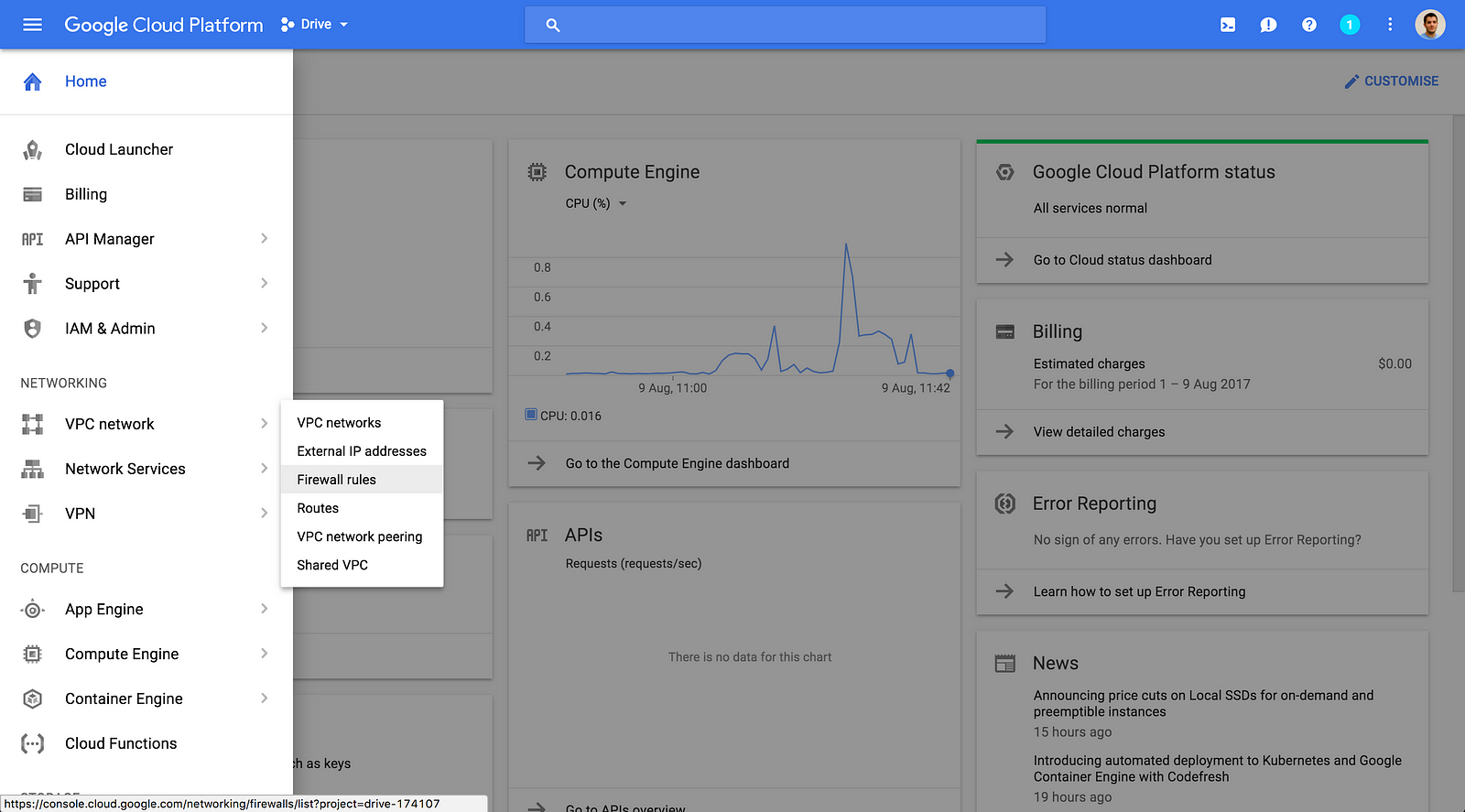

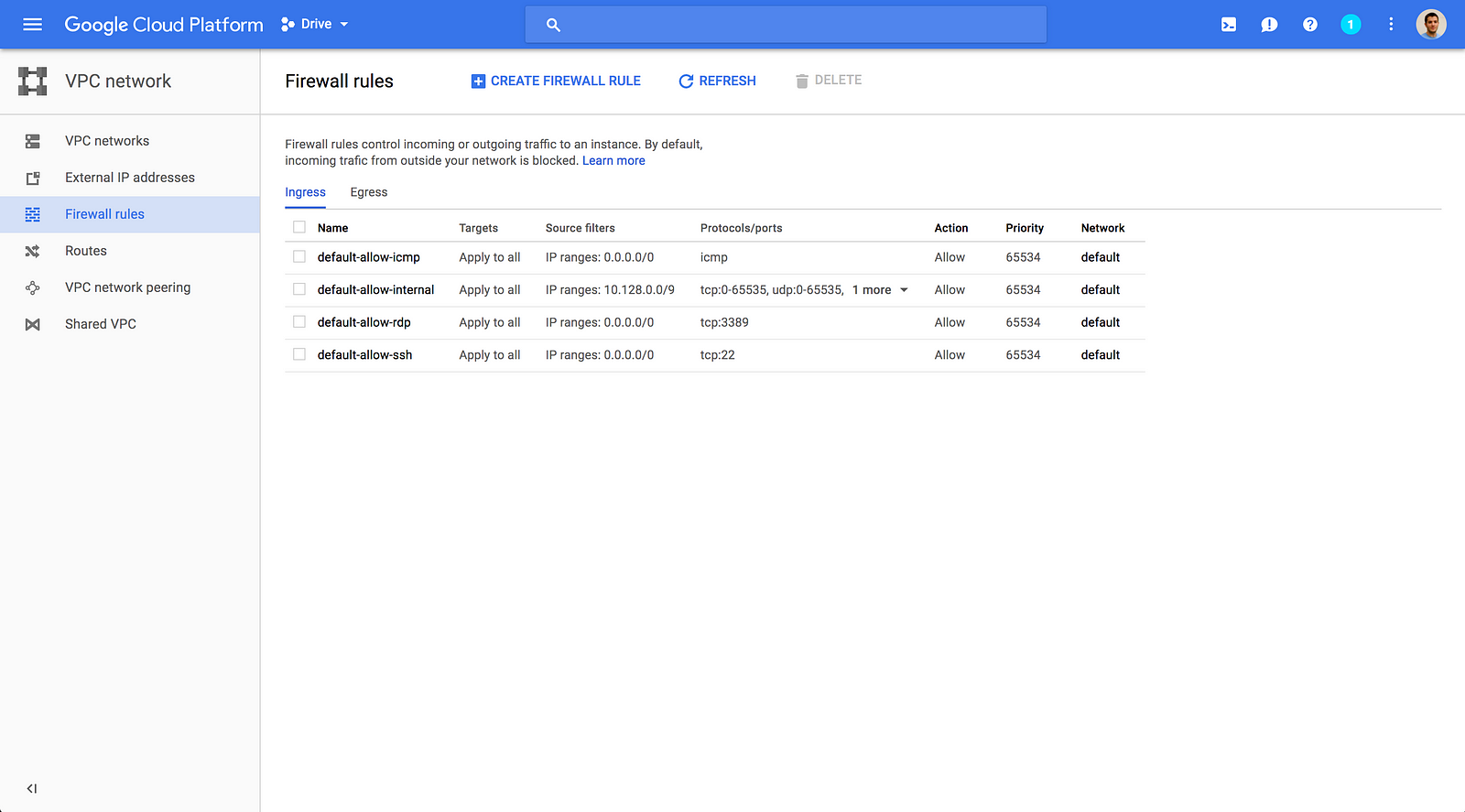

Adding a firewall rule

In order to access your Jupyter notebooks you need to add a firewall rule to allow incoming traffic on port 8888.

Using the side menu go to “VPC network” > “Firewall rules”.

Click on “Create firewall rule”.

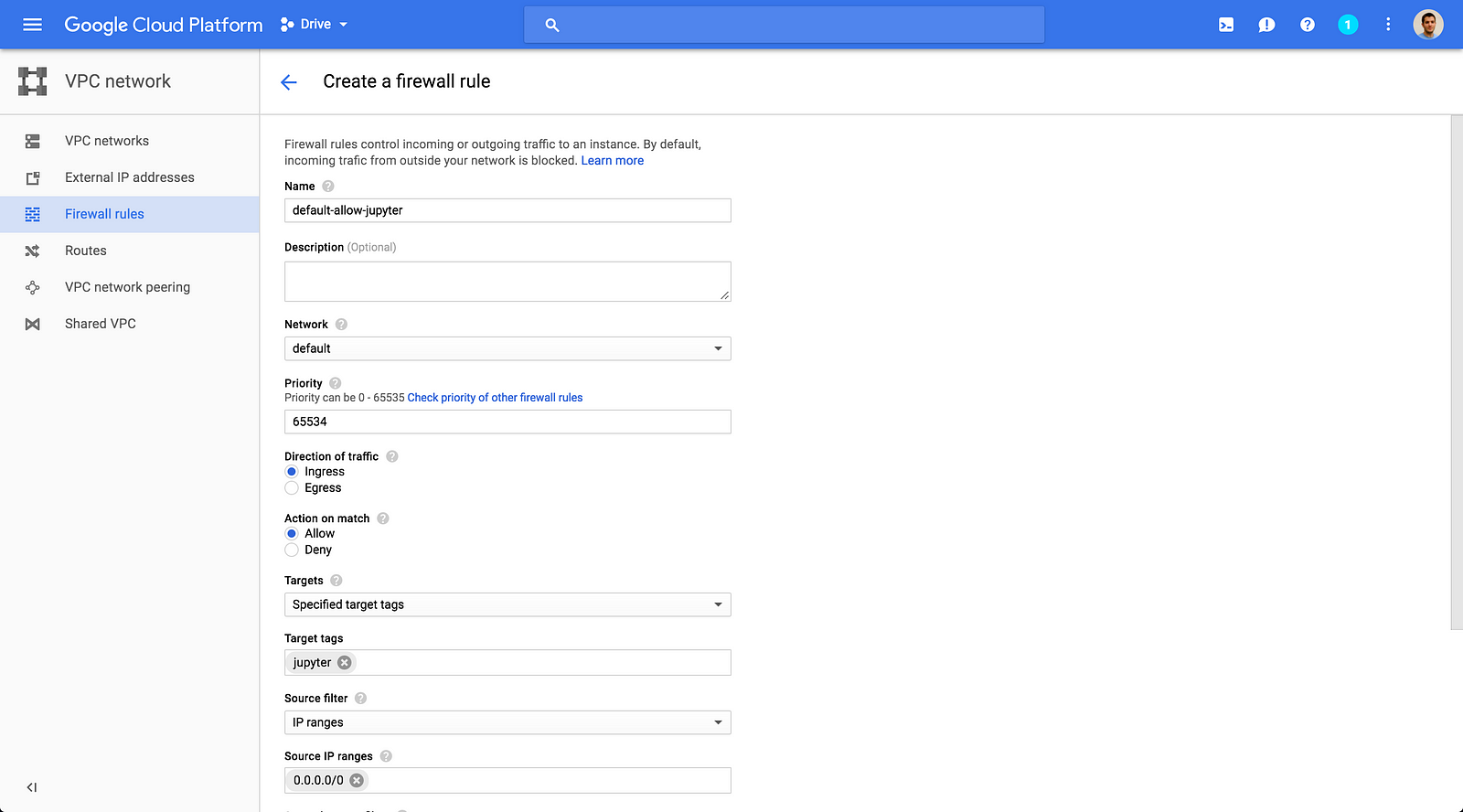

Put these values in the form:

-

Name:

default-allow-jupyter. -

Network:

default. -

Priority:

65534. -

Direction of traffic:

Ingress. We are only interested in allowing incoming traffic in. -

Action on match:

Allow. We want to allow traffic that matches that specific rule. -

Targets:

Specified target tags. We don’t want to apply this rule to all instances in the project, only to a select few. -

Target tags:

jupyter. Only the instances with the jupyter tag will have this firewall rule applied. -

Source filter:

IP ranges. -

Source IP ranges:

0.0.0.0/0. Traffic coming from any network will be allowed in. If you want to make this more restrictive you can put your own IP address. -

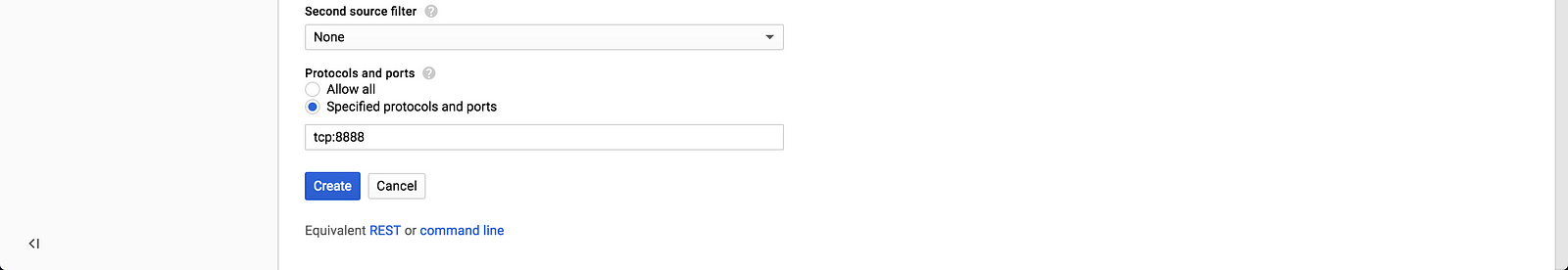

Protocols and ports:

tcp:8888. Only allow TCP traffic on port 8888.

Launching an instance – CLI edition

You can skip this section if you already started your server using the web-based console.

Installing Cloud SDK

Follow the guide available on the Google Cloud Platform documentation site to install the latest version of the Cloud SDK for your OS.

Creating a project

$ gcloud projects create "drive-cli" --name "Drive CLI"

If your project ID is already taken feel free to append some random number to it.

Enable billing

Go to the following page to enable billing for your newly created project:

https://console.developers.google.com/billing/linkedaccount?project=[YOUR_PROJECT_ID]

Note: I haven’t found a way to do this from the CLI but if someone reading this does, please let me know in the comments.

Requesting a quota increase

Before you can start an instance with an attached GPU you need to ensure that you have enough GPUs available in your selected region. Failing that, you will need to request a quota increase here:

https://console.cloud.google.com/iam-admin/quotas?project=[PROJECT_ID]

Then you can follow the web version of this guide to request a quota increase.

Launching a VM instance

In order to create an instance with one or more attached GPUs you need to have at least version 144.0.0 of the gcloud command-line tool and the beta components installed.

$ gcloud version
Google Cloud SDK 165.0.0
beta 2017.03.24
bq 2.0.25
core 2017.07.28
gcloud
gsutil 4.27

If you don’t meet these requirements, run the following command:

$ gcloud components update && gcloud components install beta

Create a file with the format below on your local machine. The instance creation command further down expects it at ~/drive_public_keys:

[USERNAME]:[PUBLIC KEY FILE CONTENT]

For instance (the key was shortened for practical reasons):

steve:ssh-rsa AAAAB3NzaC1y[...]c2EYSYVVw== steve@drive01

Time to create your instance:

$ gcloud beta compute instances create drive01 \
    --machine-type n1-standard-8 --zone europe-west1-b \
    --accelerator type=nvidia-tesla-k80,count=1 \
    --boot-disk-size 50GB --boot-disk-type pd-ssd \
    --image-family ubuntu-1604-lts --image-project ubuntu-os-cloud \
    --maintenance-policy TERMINATE --restart-on-failure \
    --metadata-from-file ssh-keys=~/drive_public_keys \
    --tags jupyter --project "[YOUR_PROJECT_ID]"

The output should be similar to this if the command runs successfully:

Created [https://www.googleapis.com/compute/beta/projects/drive-test/zones/europe-west1-b/instances/drive01].
NAME     ZONE            MACHINE_TYPE   PREEMPTIBLE  INTERNAL_IP  EXTERNAL_IP    STATUS
drive01  europe-west1-b  n1-standard-8               10.132.0.2   146.148.9.152  RUNNING

Write down the external IP.

Adding a firewall rule

$ gcloud beta compute firewall-rules create "default-allow-jupyter" \
    --network "default" --allow tcp:8888 \
    --direction "ingress" --priority 65534 \
    --source-ranges 0.0.0.0/0 \
    --target-tags "jupyter" --project "[YOUR_PROJECT_ID]"

Here is what the output should be:

Creating firewall...done.
NAME                   NETWORK  DIRECTION  PRIORITY  ALLOW     DENY
default-allow-jupyter  default  INGRESS    65534     tcp:8888

Preparing the server

Now that your instance is launched and the firewall is correctly set up, it’s time to configure the server.

Start by SSHing into your server using this command:

$ ssh -i ~/.ssh/my-ssh-key [USERNAME]@[EXTERNAL_IP_ADDRESS]

[EXTERNAL_IP_ADDRESS] is the address you wrote down a couple of steps earlier.

[USERNAME] is the username that was in your SSH key.

Since it’s your first time connecting to that server you will get the following prompt. Type “yes”.

The authenticity of host '146.148.9.152 (146.148.9.152)' can't be established.
ECDSA key fingerprint is SHA256:xll+CHEOChQygmnv0OjdFIiihAcx69slTjQYdymMLT8.
Are you sure you want to continue connecting (yes/no)? yes

Let’s first update the system software and install some much needed dependencies:

$ sudo apt-get update
$ sudo apt-get upgrade
$ sudo apt-get install -y build-essential

Luckily Ubuntu ships with both Python 2 and Python 3 pre-installed so you can move directly to the next step: installing Miniconda.

$ cd /tmp
$ curl -O https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh
$ md5sum Miniconda3-latest-Linux-x86_64.sh

The output from the last command should be something like:

c1c15d3baba15bf50293ae963abef853 Miniconda3-latest-Linux-x86_64.sh

This is the MD5 sum of your downloaded file. You can compare it against the MD5 hashes found here to verify the integrity of your installer.
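If you would rather not compare 32 hexadecimal characters by eye, the check can be scripted. This is only a sketch using Python’s standard hashlib module; the function names are mine, and the published hash is something you still have to copy from the download page yourself.

```python
import hashlib


def md5sum(path, chunk_size=1024 * 1024):
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_md5(path, published_hash):
    """Compare a file's MD5 sum with a published one, ignoring case and whitespace."""
    return md5sum(path) == published_hash.strip().lower()
```

For instance, matches_published_md5("/tmp/Miniconda3-latest-Linux-x86_64.sh", "<hash from the download page>") returns True only when both hashes agree. The same helper works for the CUDA package later in this guide.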

You can now run the install script:

$ bash Miniconda3-latest-Linux-x86_64.sh

This will give you the following output:

Welcome to Miniconda3 4.3.21 (by Continuum Analytics, Inc.)

In order to continue the installation process, please review the license

agreement.

Please, press ENTER to continue

>>>

Press [ENTER] to continue, and then press [ENTER] again to read through the licence. Once you have reached the end you will be prompted to accept it:

Do you approve the license terms? [yes|no]
>>>

Type “yes” if you agree.

The installer will now ask you to choose the location of your installation. Press [ENTER] to use the default location, or type a different location if you want to customise it:

Miniconda3 will now be installed into this location:

/home/steve/miniconda3

- Press ENTER to confirm the location

- Press CTRL-C to abort the installation

- Or specify a different location below

[/home/steve/miniconda3] >>>

The installation process will begin. Once it’s finished the installer will ask you whether you want it to prepend the install location to your PATH. This is needed to use the conda command in your shell so type “yes” to agree.

...

installation finished.

Do you wish the installer to prepend the Miniconda3 install location
to PATH in your /home/steve/.bashrc ? [yes|no]
[no] >>>

Here is the final output from the installation process:

Prepending PATH=/home/steve/miniconda3/bin to PATH in /home/steve/.bashrc
A backup will be made to: /home/steve/.bashrc-miniconda3.bak

For this change to become active, you have to open a new terminal.

Thank you for installing Miniconda3!

Share your notebooks and packages on Anaconda Cloud!
Sign up for free: https://anaconda.org

Source your .bashrc file in order to complete the installation:

$ source ~/.bashrc

You can verify that Miniconda was successfully installed by typing the following command in your shell:

$ conda list
# packages in environment at /home/steve/miniconda3:
#
asn1crypto 0.22.0 py36_0
cffi 1.10.0 py36_0
conda 4.3.21 py36_0
conda-env 2.6.0 0
...

Installing CUDA Toolkit

In order to reap all the benefits of the Tesla K80 mounted in your server you need to install the NVIDIA CUDA Toolkit.

First, check that your GPU is properly installed:

$ lspci | grep -i nvidia
00:04.0 3D controller: NVIDIA Corporation GK210GL [Tesla K80] (rev a1)

At the time of writing the most recent version of CUDA Toolkit supported by Tensorflow is 8.0.61-1 so I’ll use this version throughout this guide. If you read this guide in the future, feel free to swap it with the newest version found on https://developer.nvidia.com/cuda-downloads.

$ cd /tmp
$ curl -O https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-repo-ubuntu1604_8.0.61-1_amd64.deb

Compare the MD5 sum of this file against the one published here.

$ md5sum cuda-repo-ubuntu1604_8.0.61-1_amd64.deb
1f4dffe1f79061827c807e0266568731 cuda-repo-ubuntu1604_8.0.61-1_amd64.deb

If they match, you can proceed to the next step:

$ sudo dpkg -i cuda-repo-ubuntu1604_8.0.61-1_amd64.deb

$ sudo apt-get update

$ sudo apt-get install -y cuda-8-0

Run this command to add some environment variables to your .bashrc file:

$ cat <<EOF >> ~/.bashrc
export CUDA_HOME=/usr/local/cuda-8.0
export LD_LIBRARY_PATH=\${CUDA_HOME}/lib64
export PATH=\${CUDA_HOME}/bin:\${PATH}
EOF

Source your .bashrc file again:

$ source ~/.bashrc

NVIDIA provides some sample programs that will allow us to test the installation. Copy them into your home directory and build one:

$ cuda-install-samples-8.0.sh ~

$ cd ~/NVIDIA_CUDA-8.0_Samples/1_Utilities/deviceQuery

$ make

If it goes well you can now run the deviceQuery utility to verify your CUDA installation.

$ ./deviceQuery

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "Tesla K80"

CUDA Driver Version / Runtime Version 8.0 / 8.0

CUDA Capability Major/Minor version number: 3.7

Total amount of global memory: 11440 MBytes (11995578368 bytes)

(13) Multiprocessors, (192) CUDA Cores/MP: 2496 CUDA Cores

...

You can also use the nvidia-smi utility to verify that the driver is running properly:

$ nvidia-smi

Thu Aug 10 14:58:47 2017
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 375.66                 Driver Version: 375.66                    |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla K80           Off  | 0000:00:04.0     Off |                    0 |
| N/A   33C    P0    57W / 149W |      0MiB / 11439MiB |    100%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID  Type  Process name                               Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

Installing cuDNN

cuDNN is a GPU-accelerated library of primitives for deep neural networks provided by NVIDIA. It is required by Tensorflow when you install the version with GPU support.

Tensorflow 1.2.1 (the version we are using in this guide) only supports cuDNN 5.1.

In order to download the library you will have to register for an NVIDIA developer account here.

Once your account is created you can download cuDNN here (you will have to log in).

Agree to the terms and download the “cuDNN v5.1 for Linux” archive on your local computer. Be sure to use the version for CUDA 8.0.

Run these commands to go into the directory where the archive was downloaded (in my case it’s ~/Downloads) and upload it to your server:

$ cd ~/Downloads/
$ scp -i ~/.ssh/my-ssh-key cudnn-8.0-linux-x64-v5.1.tgz [USERNAME]@[EXTERNAL_IP_ADDRESS]:/tmp

Once it’s successfully uploaded, go back to your server, then uncompress and copy the cuDNN library to the CUDA toolkit directory:

$ cd /tmp
$ tar xvzf cudnn-8.0-linux-x64-v5.1.tgz
$ sudo cp -P cuda/include/cudnn.h $CUDA_HOME/include
$ sudo cp -P cuda/lib64/libcudnn* $CUDA_HOME/lib64
$ sudo chmod u+w $CUDA_HOME/include/cudnn.h
$ sudo chmod a+r $CUDA_HOME/lib64/libcudnn*

Accessing Github repos

Being able to push and pull to your repos on Github directly from the server is way more convenient than having to synchronise files on your laptop first. In order to enable that workflow you will add a SSH key specific to this server to your Github account.

First, generate a SSH key pair on your server:

$ ssh-keygen -o -a 100 -t ed25519 -f ~/.ssh/id_ed25519 -C steve@drive01Enter a passphrase and choose a location for your key when you are prompted.

Read the content of the public key and copy it to your clibboard:

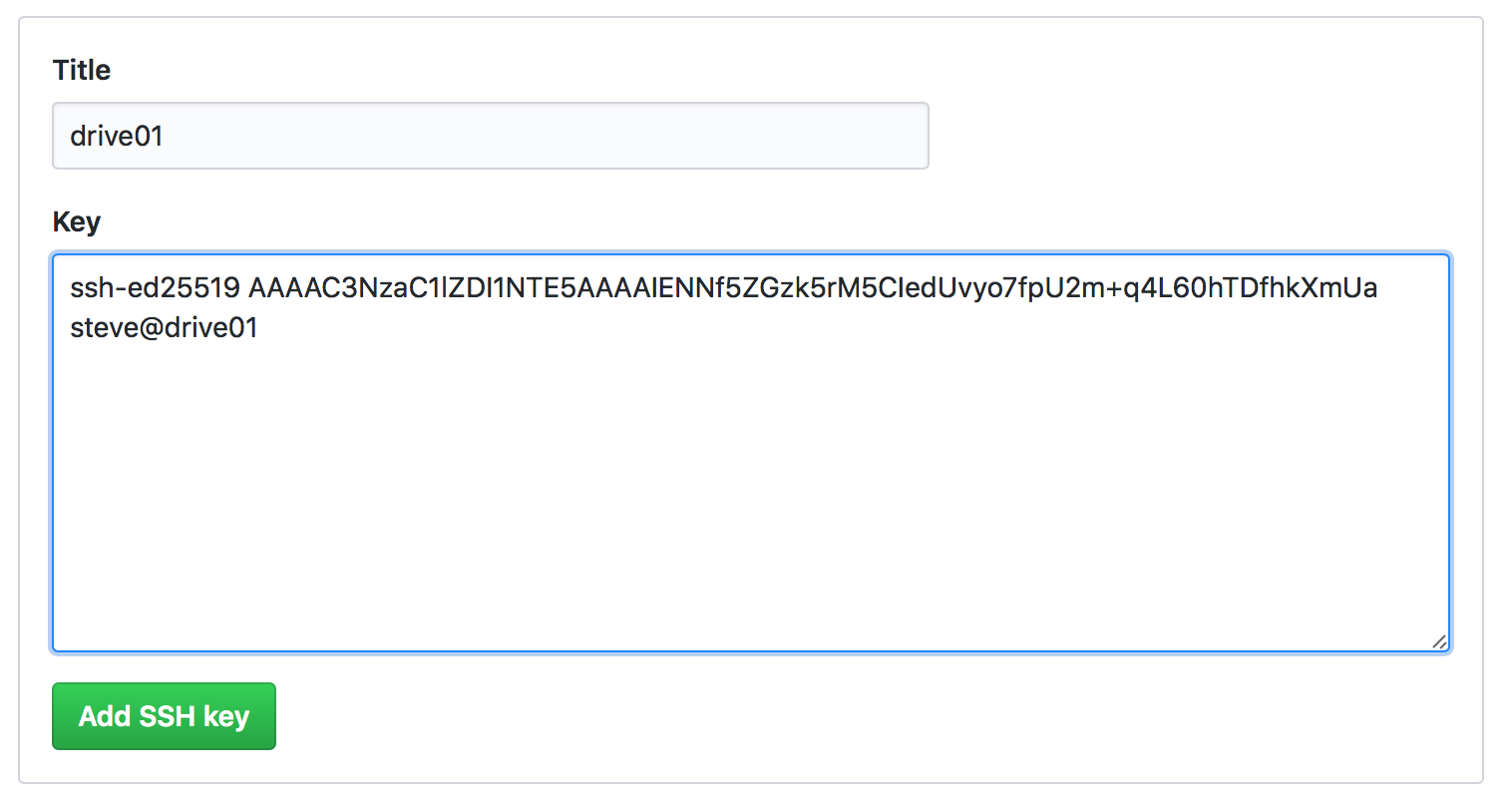

$ cat ~/.ssh/id_ed25519.pubHead to your Github SSH and GPG keys settings, click on “New SSH key”, give a name to your key in the “Title” section and paste the key itself in the “Key” section.

Once the key is added, you can go back to your server.

Setting up the CarND environment

Create a new folder named carnd in your home directory and clone the CarND-Term1-Starter-Kit repository:

$ mkdir ~/carnd
$ cd ~/carnd
$ git clone git@github.com:udacity/CarND-Term1-Starter-Kit.git
$ cd CarND-Term1-Starter-Kit

There is one minor change we need to make in the environment-gpu.yml file provided by Udacity before we create the conda environment.

Using vim, nano or whichever text editor you are most familiar with, change this:

# environment-gpu.yml
name: carnd-term1
channels:
    - https://conda.anaconda.org/menpo
    - conda-forge
dependencies:
    - python==3.5.2
    - numpy
    - matplotlib
    - jupyter
    - opencv3
    - pillow
    - scikit-learn
    - scikit-image
    - scipy
    - h5py
    - eventlet
    - flask-socketio
    - seaborn
    - pandas
    - ffmpeg
    - imageio=2.1.2
    - pyqt=4.11.4
    - pip:
        - moviepy
        - https://storage.googleapis.com/tensorflow/linux/gpu/tensorflow_gpu-0.12.1-cp35-cp35m-linux_x86_64.whl
        - keras==1.2.1

into this:

# environment-gpu.yml
name: carnd-term1
channels:
    - https://conda.anaconda.org/menpo
    - conda-forge
dependencies:
    - python==3.5.2
    - numpy
    - matplotlib
    - jupyter
    - opencv3
    - pillow
    - scikit-learn
    - scikit-image
    - scipy
    - h5py
    - eventlet
    - flask-socketio
    - seaborn
    - pandas
    - ffmpeg
    - imageio=2.1.2
    - pyqt=4.11.4
    - pip:
        - moviepy
        - tensorflow-gpu==1.2.1
        - keras==1.2.1

This change will ensure you grab the latest available version of Tensorflow with GPU support.

You are now ready to create the conda environment:

$ conda env create -f environment-gpu.yml

This command will pull all the specified dependencies. It may take a little while.

If it successfully creates the environment you should see this output in your console:

...

Successfully installed backports.weakref-1.0rc1 decorator-4.0.11 keras-1.2.1 markdown-2.6.8 moviepy-0.2.3.2 protobuf-3.3.0 tensorflow-gpu-1.2.1 theano-0.9.0 tqdm-4.11.2

#
# To activate this environment, use:
# > source activate carnd-term1
#
# To deactivate this environment, use:
# > source deactivate carnd-term1

Time to try your new install!

Activate the environment:

$ source activate carnd-term1

Run this short TensorFlow program in a python shell:

(carnd-term1) $ python
>>> import tensorflow as tf
>>> hello = tf.constant('Hello, TensorFlow!')
>>> sess = tf.Session()
>>> print(sess.run(hello))
b'Hello, TensorFlow!'

If you get this output then congratulations, your server is ready for the Udacity Self-Driving Car Nanodegree projects.

If you get an error message, see this section on the Tensorflow website for common installation problems or leave a comment here.
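The hello world program runs fine on a CPU too, so it doesn’t prove that Tensorflow actually sees the K80. Here is a minimal sketch of an extra check, assuming the device_lib module that ships with Tensorflow 1.x; the helper name is mine, and the exact device names you get back may differ between Tensorflow versions.

```python
def gpu_device_names(devices):
    """Return the names of all devices whose type is GPU."""
    return [device.name for device in devices if device.device_type == "GPU"]


def main():
    # Imported here so the helper above can be used without Tensorflow installed.
    try:
        from tensorflow.python.client import device_lib
    except ImportError:
        print("Tensorflow is not installed in this environment.")
        return
    gpus = gpu_device_names(device_lib.list_local_devices())
    if gpus:
        print("Tensorflow can see: " + ", ".join(gpus))
    else:
        print("No GPU visible to Tensorflow, check the CUDA and cuDNN steps.")


if __name__ == "__main__":
    main()
```

Run it inside the carnd-term1 environment. If no GPU shows up, the CUDA Toolkit and cuDNN sections are the first places I would look.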

The Lab

Now that your server is ready, it’s time to put it to good use.

I’ll run through how to use your server using the LeNet lab as an example but these steps apply to any other Jupyter-based lab in the course.

Jupyter config

In order to access your Jupyter notebook you need to edit the Jupyter config so that the server binds on all interfaces rather than localhost.

$ jupyter notebook --generate-config

This command will generate a config file at ~/.jupyter/jupyter_notebook_config.py.

Using a text editor, replace this line:

#c.NotebookApp.ip = 'localhost'

with this:

c.NotebookApp.ip = '*'

Next time you launch a Jupyter notebook the internal server will bind on all interfaces instead of localhost, allowing you to access the notebook in your browser.
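If you rebuild servers often you may prefer to script this edit. The sketch below does the same replacement with plain Python; the function name is hypothetical, and it assumes the generated config still contains the commented-out default exactly as shown above, otherwise it leaves the file unchanged.

```python
import os

OLD_LINE = "#c.NotebookApp.ip = 'localhost'"
NEW_LINE = "c.NotebookApp.ip = '*'"


def bind_all_interfaces(config_text):
    """Return the config text with the commented-out default ip line replaced."""
    lines = config_text.splitlines()
    updated = [NEW_LINE if line.strip() == OLD_LINE else line for line in lines]
    return "\n".join(updated) + "\n"


if __name__ == "__main__":
    path = os.path.expanduser("~/.jupyter/jupyter_notebook_config.py")
    if os.path.exists(path):
        with open(path) as handle:
            original = handle.read()
        with open(path, "w") as handle:
            handle.write(bind_all_interfaces(original))
```

Keep in mind that binding on all interfaces without a password leaves the notebook open to anyone who can reach port 8888.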

Launching the notebook

First, let’s retrieve the content of the lab by cloning the repository from Github.

Run these commands on your server:

$ cd ~/carnd
$ git clone https://github.com/udacity/CarND-LeNet-Lab.git
$ cd CarND-LeNet-Lab/

If your conda environment is not active already, activate it now:

$ source activate carnd-term1

Launch the notebook:

(carnd-term1) $ jupyter notebook

Running the lab

On your local machine, open your browser and head to:

http://[EXTERNAL_IP_ADDRESS]:8888

If it’s not open already, click on “LeNet-Lab-Solution.ipynb” to launch the LeNet lab solution notebook.
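If the page refuses to load, it helps to find out whether port 8888 is reachable at all before blaming Jupyter. Here is a small sketch to run on your local machine, using only the standard socket module; the function name and the 3 second timeout are arbitrary choices of mine.

```python
import socket
import sys


def port_is_open(host, port=8888, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    if len(sys.argv) > 1:
        host = sys.argv[1]
        state = "reachable" if port_is_open(host) else "not reachable"
        print("Port 8888 on {} is {}".format(host, state))
```

Pass your instance’s external IP as the only argument. A “not reachable” answer usually points at the firewall rule, a missing jupyter network tag, or a notebook server that is still bound to localhost.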

Run each cell in the notebook. The network with the hyperparameters given in the solution notebook should be trained in a minute or so on that machine.

Tada! You have a fully functioning GPU instance.

Important: You are billed for each minute your server is on so don’t forget to stop it once you are done using it. Note that you will still be paying a small amount for storage (~$8/month for 50GB) until you terminate the instance. Check this guide to learn how to stop or delete an instance.

Conclusion

Hopefully this guide convinced you that setting up a GPU-backed instance on Google Cloud Platform is as easy as on AWS.

In order to keep this guide as short and digestible as possible (I really tried!) I glossed over some very important topics:

- Server security: there are a few configuration steps that one should take early on as part of the basic setup in order to increase the security of the server.

- Application security: your Jupyter notebooks are currently accessible to anyone with your server’s IP address. You can easily update the firewall rule to only allow access from your own IP and change the Jupyter configuration to require a password and use SSL.

- Automation: doing all this work by hand is obviously quite tedious and it would make sense to use a configuration management software or a tool like Terraform to automate parts, if not all, of it. Further, you could create an image out of your fully configured server or at the very least take a snapshot so that you avoid starting from scratch next time you boot an instance.

I hope you enjoyed it. If you have any questions or comments, please feel free to get in touch using the comment section below or by emailing me directly.